Key takeaways:

- AWS is nearing a deal with Constellation Energy, the largest U.S. owner of nuclear plants, to get electricity directly from one of its facilities on the East Coast. Other tech companies are exploring similar arrangements.

- Training AI models, processing AI inferences and running AI applications consume more energy than conventional software because they handle a much larger amount of data.

- In some areas, AI data centers could divert power from the existing grid, further pressuring supply.

Tech companies are in talks with the owners of nuclear power plants to secure electricity for their power-hungry AI data centers, according to The Wall Street Journal.

AWS has nearly reached a deal with Constellation Energy, the nation’s largest owner of nuclear power plants, to get electricity directly from one of its plants on the East Coast. The deal would follow AWS’ acquisition in March of a nuclear-powered data center in Pennsylvania for $650 million.

Vistra CEO Jim Burke said his company and “many in the industry” have been approached and told, “I need as much power as you can make available.” Vistra owns nuclear, gas and solar plants. The Journal did not name the other tech companies.

Training AI models, processing AI inferences and running AI applications consume more energy than conventional software because they handle a much larger amount of data.

The paper said a single AI model can use tens of thousands of kilowatt-hours (kWh), while more complex models such as ChatGPT can consume up to 100 times more. By comparison, the average U.S. home consumes 900 kWh a month, according to the U.S. Energy Information Administration.

Another example: A typical Google search consumes 0.3 watt-hours of electricity, while a ChatGPT request takes 2.9 watt-hours. Once Google fully implements AI in search, its electricity demand could rise 10-fold, according to the ‘Electricity 2024’ report by the International Energy Agency.
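The arithmetic behind these comparisons can be sketched in a few lines of Python. This is only an illustration that uses the per-request and household figures quoted above; the function and variable names are hypothetical, and the real energy cost of a request varies by model and hardware.

```python
# Figures quoted in the article (IEA 'Electricity 2024' report and U.S. EIA).
GOOGLE_SEARCH_WH = 0.3        # watt-hours per typical Google search
CHATGPT_REQUEST_WH = 2.9      # watt-hours per ChatGPT request
US_HOME_KWH_PER_MONTH = 900   # average U.S. home, kWh per month


def energy_ratio(ai_wh: float, search_wh: float) -> float:
    """How many times more energy an AI request uses than a search."""
    return ai_wh / search_wh


def requests_per_home_month(request_wh: float, home_kwh: float) -> float:
    """Number of requests that add up to one home's monthly electricity use."""
    home_wh = home_kwh * 1000  # 1 kWh = 1,000 Wh
    return home_wh / request_wh


ratio = energy_ratio(CHATGPT_REQUEST_WH, GOOGLE_SEARCH_WH)
chatgpt_requests = requests_per_home_month(CHATGPT_REQUEST_WH, US_HOME_KWH_PER_MONTH)

print(f"A ChatGPT request uses about {ratio:.1f}x the energy of a search")
print(f"About {chatgpt_requests:,.0f} ChatGPT requests equal one home-month")
```

The ratio of roughly 10 is consistent with the 10-fold rise in demand cited in the report.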

Thirsty consumers of electricity

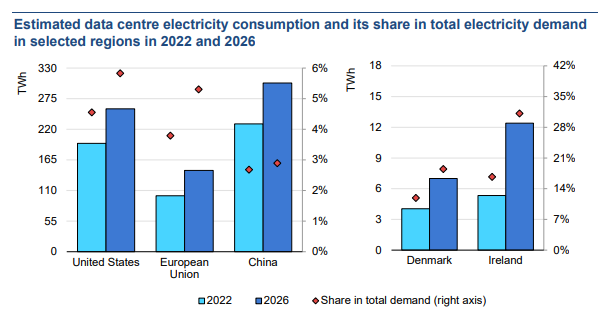

In 2022, data centers consumed 460 terawatt-hours of electricity, or nearly 2% of global demand, according to the International Energy Agency. By 2026, data centers are expected to gobble up more than 1,000 terawatt-hours of electricity, roughly equivalent to Japan’s electricity consumption. (One terawatt-hour equals 1 billion kilowatt-hours.)
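To put those terawatt-hour figures on a household scale, here is a rough Python sketch. It combines the data center totals above with the 900 kWh monthly household figure cited earlier; the names are hypothetical, and because the 2026 figure is "more than 1,000" terawatt-hours, the growth factor is a lower bound.

```python
KWH_PER_TWH = 1_000_000_000   # one terawatt-hour equals 1 billion kWh

DATA_CENTER_TWH_2022 = 460    # IEA figure for 2022
DATA_CENTER_TWH_2026 = 1_000  # IEA projection ("more than 1,000")
US_HOME_KWH_PER_YEAR = 900 * 12  # 900 kWh a month, per the EIA figure


def twh_to_kwh(twh: float) -> float:
    """Convert terawatt-hours to kilowatt-hours."""
    return twh * KWH_PER_TWH


def equivalent_us_homes(twh: float) -> float:
    """Number of average U.S. homes that would use this much in a year."""
    return twh_to_kwh(twh) / US_HOME_KWH_PER_YEAR


growth = DATA_CENTER_TWH_2026 / DATA_CENTER_TWH_2022
homes_2022 = equivalent_us_homes(DATA_CENTER_TWH_2022)

print(f"Projected growth 2022 to 2026: at least {growth:.2f}x")
print(f"2022 usage equals roughly {homes_2022 / 1e6:.1f} million U.S. homes")
```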

About 40% of power used in data centers is for computing while another 40% is for cooling the servers. The rest is for the power supply system, storage devices and communications equipment.

Source: International Energy Agency
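As a rough illustration of the split described above, the sketch below applies the 40/40/20 breakdown to the 2022 total of 460 terawatt-hours. Applying the shares to the global total is an assumption made here for illustration, not a calculation from the IEA, and the category names are hypothetical labels.

```python
DATA_CENTER_TWH_2022 = 460  # IEA figure for 2022

# Shares in percent, from the breakdown above; the remainder covers the
# power supply system, storage devices and communications equipment.
SHARES_PCT = {"computing": 40, "cooling": 40}
SHARES_PCT["other"] = 100 - sum(SHARES_PCT.values())


def split_by_share(total_twh: float, shares_pct: dict) -> dict:
    """Split a total across categories using percentage shares."""
    return {name: total_twh * pct / 100 for name, pct in shares_pct.items()}


# Assumption: the 40/40/20 split holds for the global 2022 total.
breakdown = split_by_share(DATA_CENTER_TWH_2022, SHARES_PCT)

for name, twh in breakdown.items():
    print(f"{name}: {twh:.0f} TWh")
```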

By tapping nuclear power plants directly, AI data centers can shave years off construction because they need little to no new grid infrastructure. In some areas, however, they would be diverting electricity from the existing power grid, further pressuring supply and potentially raising prices for other customers.

To add supply, the most efficient path is to build plants powered by natural gas, which can operate 24/7, unlike solar and other green energy sources, the paper said. On the other hand, many other regions have an oversupply of power, said Constellation CEO Joseph Dominguez.

An Amazon spokeswoman told the Journal that “to supplement our wind- and solar-energy projects, which depend on weather conditions to generate energy, we’re also exploring new innovations and technologies, and investing in other sources of clean, carbon-free energy.”

Separately, the Journal reported that Amazon is planning to invest $100 billion to build data centers over the next decade to meet surging AI demand. The e-commerce giant is now spending more on cloud computing than on its retail warehouses.

The company said building out its AI infrastructure feels like the days when it was constructing its expansive delivery network. AWS expects to reap tens of billions of dollars in revenue from AI over the next several years.