TLDR

- AI agents that can self-execute tasks for you could become the ‘synthetic workforce’ of the future, where they work with one another, supervise each other and even teach each other.

- But companies aren’t quite sure they are ready to trust AI agents to do things autonomously – yet.

- VC investment and enterprise interest in AI agents, however, are growing.

AI agents could one day form a ‘synthetic workforce’ that can do the tasks of junior to mid-level employees, according to a new report released by Forum Ventures.

AI agents are systems powered by large language models that can reason and execute complex tasks autonomously. That means they can act on your behalf online. Going to an off-site meeting? An agent will see your schedule and automatically call an Uber for you.

In one to three years, these AI agents will be able to handle increasingly complex tasks and perhaps collaborate with one another on larger projects, the report said. The AI agents of the future could also manage other AI agents, acting as their supervisors. These multi-agent hierarchies could also take a teacher-student configuration, where one AI agent teaches a ‘pupil’ agent.

But it goes beyond doing tasks for you. The report said that one day people might stop accessing websites and apps to do things – they will ask the AI agent to do it for them. That means traditional user interfaces could some day go away as well.

While this capability could radically transform business operations – imagine a host of AI agents acting essentially like a shadow workforce, doing tasks without being explicitly told what to do – it is also scaring companies. Why? They will have to trust an AI agent to do things right all the time.

The trust gap

Companies cite trust as the key reason for their caution about AI agents, according to the report, which polled 100 senior IT decision-makers in the U.S. and interviewed AI innovators and investors.

At present, the technology is in its “early stages” and “we have yet to see meaningful uptake,” according to the report. “Until AI agents can consistently demonstrate their value and mitigate risks, enterprises are likely to proceed cautiously, prioritizing smaller-scale implementations and pilot projects over full-scale adoption.”

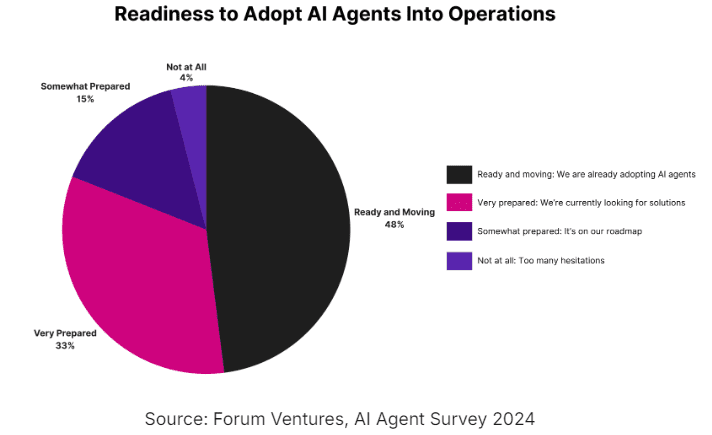

However, venture capital investment in agentic AI has surged and interest in AI agents among enterprises is growing. The survey showed that 48% of enterprises are already adopting agentic solutions and another 33% are exploring solutions.

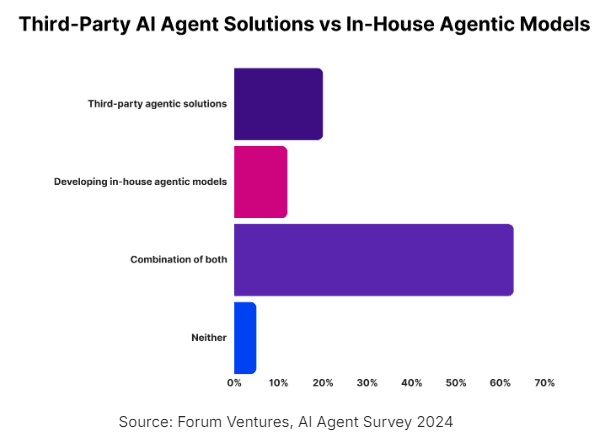

Most of the companies interested in AI agents are using both their internal models and third-party solutions.

Barriers to AI agent adoption

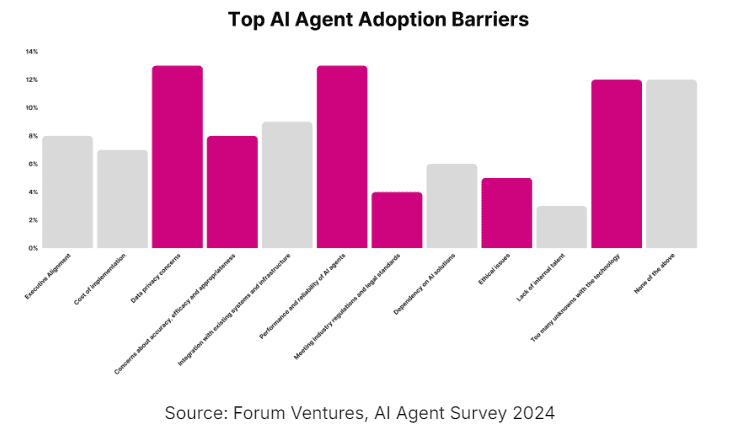

Wariness about trusting AI agents is the top reason companies hesitate to adopt these systems. It goes hand in hand with concerns about data accuracy, privacy and agent performance, which together give companies pause.

Under the wider umbrella of trust, data privacy concerns topped the list at 13%, tying with performance and reliability. Too many unknowns about AI agents came in next at 12%, while accuracy, efficacy and appropriateness followed, at 8%. The fifth-highest concern was ethical issues, at 5%.

“The trust gap is enormous. While AI agents can perform tasks with remarkable efficiency, their outputs are based on statistical probabilities rather than inherent truths,” said Jonah Midanik, general partner and COO at Forum Ventures, in the report.

Added Tim Guleri, managing partner at Sierra Ventures: “Enterprises need confidence that their data remains secure, and the AI agents’ outputs are aligned with company values and policies. Without these assurances, enterprises will hesitate to deploy AI agents, especially as they become more autonomous. Policies and frameworks to manage this trust gap will be key to accelerating adoption.”

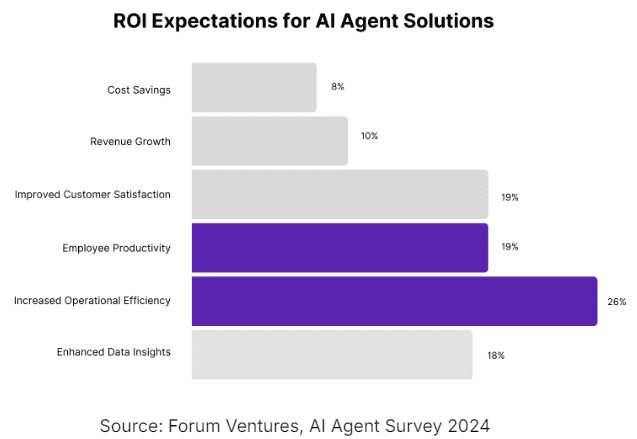

As for return on investment (ROI), companies are looking for it in the following areas:

Advice to startups

Startups developing agentic AI solutions, the report said, should focus on the following to build trust with their enterprise clients:

Prioritize transparency – Companies need to understand how AI agents make decisions and to trust that these decisions are reliable.

- Provide clear documentation of decision-making processes, using explainable AI frameworks that break down how an agent arrives at its conclusions. The documentation should be both technical and understandable to non-tech stakeholders.

- Offer audit trails that track decision points and offer visibility into how data flows through the system. Regularly updating these documents to reflect changes in the model or decision-making processes will further enhance trust.
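
To make the audit-trail idea concrete, here is a minimal Python sketch of what logging an agent's decision points might look like. It is an illustration only, not something the report describes: the `DecisionRecord` and `AuditTrail` names, their fields, and the sample values are all hypothetical.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One decision point taken by an agent (hypothetical schema)."""
    step: str            # what the agent was doing
    inputs: dict         # data the decision was based on
    output: str          # what the agent decided
    rationale: str       # plain-language explanation for reviewers
    model_version: str   # lets auditors tie a decision to a model release
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditTrail:
    """Append-only, in-memory log of decision records."""

    def __init__(self):
        self._records = []

    def log(self, record):
        self._records.append(record)

    def to_jsonl(self):
        # One JSON object per line, easy to ship to a log store.
        return "\n".join(
            json.dumps(asdict(r), sort_keys=True) for r in self._records
        )


trail = AuditTrail()
trail.log(DecisionRecord(
    step="classify_ticket",
    inputs={"ticket_id": "T-1"},
    output="billing",
    rationale="Ticket text mentions an invoice.",
    model_version="demo-0.1",
))
exported = trail.to_jsonl()
```

Recording the model version with each entry is one way to keep the trail meaningful as the model or decision process changes, which is the point the report makes about keeping documentation current.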

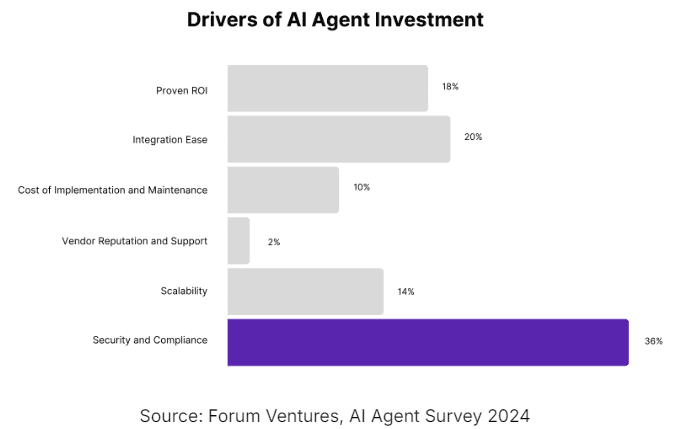

Ensure compliance and security – A third of respondents say security and compliance are the most significant factors influencing their decision to invest in AI agent solutions.

Build a human-in-the-loop framework – Twenty-two percent cited the need to maintain human control over AI decisions, particularly in critical applications, as their top ethical concern with AI agents. For highly regulated industries like legal and healthcare, human review will always be essential.
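
A human-in-the-loop framework can be as simple as a gate that holds back risky actions until a person signs off. The Python sketch below is one possible shape for such a gate, under assumed conventions: the `ProposedAction` type, the "low"/"high" risk labels and the `execute_with_oversight` function are hypothetical and do not come from the report.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    """An action the agent wants to take (hypothetical schema)."""
    description: str
    risk: str  # assumed labels: "low" or "high"


def execute_with_oversight(action, approve):
    """Run low-risk actions directly; ask a human before high-risk ones.

    `approve` is any callable that takes the action and returns True or
    False. In practice it might open a review ticket or prompt a reviewer.
    """
    if action.risk == "high" and not approve(action):
        return "blocked"
    return "executed"


# A reviewer who rejects everything, to show the gate in action.
def reject_all(action):
    return False


low = execute_with_oversight(ProposedAction("Send meeting reminder", "low"), reject_all)
high = execute_with_oversight(ProposedAction("Issue customer refund", "high"), reject_all)
```

Here `low` is `"executed"` because the reviewer is never consulted, while `high` is `"blocked"` because the reviewer declined. Which actions count as high risk is a policy decision each company would have to make for itself.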

“Startups should focus on demonstrating ROI through pilot projects, leveraging synthetic data where necessary, and emphasizing scalability,” according to the report. “Enterprises, meanwhile, should take a gradual approach to adoption — starting with small implementations before scaling — while maintaining human oversight to ensure AI agents align with their specific needs, company values, and regulatory requirements.”