TLDR

- AI-powered humanoid robots are advancing rapidly thanks to breakthroughs in multimodal models, with major players like Google DeepMind, Nvidia, Meta and Tesla showcasing robots capable of reasoning, vision and speech comprehension.

- New tools and frameworks, such as Nvidia’s Groot and Meta’s PARTNR, are enabling robots to learn general skills and operate in dynamic real-world environments.

- Despite the hype, real-world deployment faces hurdles like safety, data limitations and cost – meaning human-robot collaboration will be key in the near term, especially for variable or unstructured tasks.

Just a few years ago, the idea of AI-driven robots roaming the streets or responding to natural speech commands might have sounded like science fiction. But today, thanks to breakthroughs in artificial intelligence and multimodal learning, robots are becoming smarter and more generally capable.

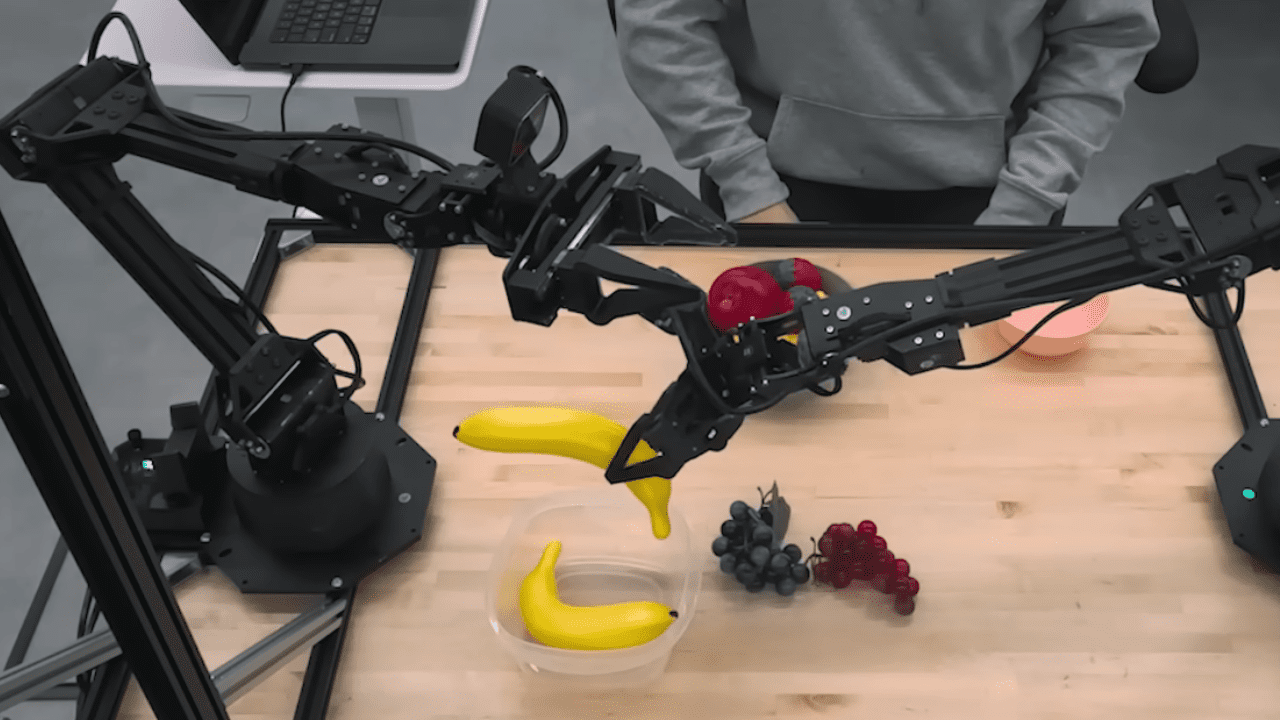

Recently, Google DeepMind introduced Gemini Robotics, an advanced vision-language-action model based on Gemini 2.0 that infuses generative AI into a physical form to directly control robots. In a video demo, a robotic arm was told to place grapes in the clear glass bowl – and it correctly sidestepped the opaque plastic bowl to find the right container. No coding or manual calibration was needed.

At Nvidia’s recent GTC developer conference, the chipmaker unveiled Groot N1, a humanoid robot foundation model that it said brings “generalized skills and reasoning” to humanoid robots.

Nvidia also announced the Isaac Groot blueprint for generating synthetic data, as well as Newton, an open-source physics engine for robots being developed with Google DeepMind and Disney Research. A physics engine simulates the laws of physics in virtual environments where robots can train.

“The age of generalist robotics is here,” said Jensen Huang, founder and CEO of Nvidia. With the Groot models and tools, “robotics developers everywhere will open the next frontier in the age of AI.”

In February, Bloomberg reported that Meta is planning to design hardware and software for robotics. A new team within the company’s Reality Labs division – the one that works on its metaverse ambitions – will start work on humanoid robots that can do household chores. Meta also plans to develop the underlying software and sensors for the robots.

Meta has unveiled PARTNR, a research framework for generalist humanoid robots. It offers a large-scale benchmark, dataset and model to help robots learn how to work with people in everyday tasks. Robots can first train in simulated environments and then apply what they have learned in the real world.

Tesla is planning to make thousands of Optimus robots this year, according to CEO Elon Musk.

There are also advances by robotics startups – 1X will start testing its humanoid robot in “a few hundred or a few thousand” homes this year, while Figure AI will be “alpha-testing” its humanoid robots, according to TechCrunch. Meanwhile, former Cruise CEO Kyle Vogt’s new robotics startup, The Bot Company, has raised $150 million in its latest round at a $2 billion valuation, according to Reuters.

Even OpenAI is getting into the act. Speaking on a panel at the recent HumanX conference, Caitlin Kalinowski, a technical staff member at OpenAI, said the startup is in a “discovery phase” in robotics. Kalinowski’s job is to explore how OpenAI’s AI models might be useful for the “robotics age.” Kalinowski added that multimodal AI models are a “big breakthrough” in that they can see the world, understand speech and comprehend the environment for context.

“AI is going to be incredibly transformative for robotics. It’s going to enable mechatronic systems to do things that we haven’t really conceived of them being able to do before,” said Brad Porter, founder and CEO of Collaborative Robotics, who previously led robotics at Amazon, at the recent HumanX conference.

However, when it comes to practically deploying these humanoid robots, “you can’t really declare it’s safe in these environments,” Porter added. What robotics makers need is more training data, along with foundation models that let robots learn from just one example, or even none.

“Jensen (Huang) described physical AI as this next step … after agentic AI, and I think that’s right. We’re going to see the AI get better and better,” Porter said. But as for the timeline, “it’s a little like self-driving. The timeline might take us a little longer than the videos (of robots doing general tasks) seem to imply. But when we get that, it’s going to be incredibly transformative.”

Erik Nieves, CEO and cofounder of Plus One Robotics, believes that these generalist humanoid AI robots won’t work in warehouse settings.

“For the foreseeable future, they’re far too impractical and expensive to replace warehouse workers. Any and all robots working on variable tasks will routinely experience circumstances outside their realm of expertise that necessitate intervention or cooperation from a human co-worker.”

“These emerging AI robots will only be successful to the degree that they are parts of human-robot teams, not standalone robots,” Nieves added. “Of course, when we revisit this conversation in 10 years, things might have changed entirely; that’s the exciting part of this field, all these technologies are trying to rise to the challenge of less structured tasks.”