Losing weight isn’t what it used to be. AI is changing how people track their food and get exercise recommendations, and it is supercharging fitness coaches so they can say the right thing at the right time to motivate their clients.

Noom, the psychology-based weight loss and wellness program, has started rolling out an AI-powered body scan, at no additional charge, that gives a more accurate picture of progress than the scale by measuring body size, fat percentage, muscle mass and more. People often get discouraged when the scale doesn’t budge, even though they may be losing fat while gaining muscle. The body scan can show them the muscle they’ve gained.

Noom also believes the body scan can help deter weight regain among people who eventually stop taking GLP-1 drugs such as Ozempic. These drugs mimic glucagon-like peptide-1 (GLP-1), a hormone that helps regulate blood sugar, appetite and digestion, and they have been shown to produce fast weight loss along with sharp drops in muscle mass. The problem is that most people eventually stop taking GLP-1 drugs, studies show, and when they do, they tend to regain the weight, partly because of the muscle mass they lost.

This week, Editor-in-Chief Deborah Yao sat down with Noom CEO Geoff Cook and Prism Labs CEO Steve Raymond to talk about their partnership on the AI body scan and how AI is changing wellness.

The AI Innovator: Tell me about your partnership.

Noom CEO Geoff Cook: In December of last year, Noom launched a new GLP-1 companion program that provides 1,000 protein-focused recipes and more than 1,000 resistance training workouts, sourced by a doctor board-certified in obesity medicine. The other side of the coin to the massive weight loss people experience on GLP-1 drugs is that a good portion of the lost weight is lean mass, including muscle mass.

Noom is a behavior change program that has always measured weight through the scale, and the weight graph is one of the main ways members track their progress, but it’s just one part of the story. We were looking for a way (to enable GLP-1 users to more accurately) track their progress: whether they really were seeing a decrease in fat composition, and whether they were sustaining lean mass.

We were looking for a solution like that, kicked the tires on a few different companies and found Prism. One of the things we liked about this (AI-powered body scan) technology is that, unlike some others that require two photos, one facing (the camera) and a side profile, Prism’s solution uses a 10-second video, which captures much richer data and therefore gives more accurate analyses.

What we announced recently is that all Noom members will soon be able to access the body scan right inside Noom. With each scan, they’ll receive a personalized (analysis that) will help explain metrics like fat composition and lean mass, among a host of others, including waist-to-hip ratio and waist-to-height ratio. We’ve just started the rollout, but we’ve gotten some pretty good excitement from members for it.
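For readers unfamiliar with the two ratios Cook mentions, both are simple quotients of measurements taken in the same units. The sketch below is illustrative only: the function names and sample measurements are hypothetical, not taken from Noom or Prism, and it says nothing about how the scan itself derives the underlying circumferences.

```python
def waist_to_hip_ratio(waist: float, hip: float) -> float:
    """Waist circumference divided by hip circumference (same units)."""
    if hip <= 0:
        raise ValueError("hip circumference must be positive")
    return waist / hip


def waist_to_height_ratio(waist: float, height: float) -> float:
    """Waist circumference divided by standing height (same units)."""
    if height <= 0:
        raise ValueError("height must be positive")
    return waist / height


# Hypothetical measurements, all in inches.
waist, hip, height = 34.0, 40.0, 68.0
print(round(waist_to_hip_ratio(waist, hip), 3))
print(round(waist_to_height_ratio(waist, height), 3))
```

Because both are ratios, the units cancel, so inches or centimeters work equally well as long as every measurement uses the same unit.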

How many times do you have to do this scan?

Cook: Members can do it as many times as they like. We recommend, I think, once a month, as part of the tracker suite on Noom. We have AI-enabled food tracking: you just take a picture of your food and it logs it for you. The weight graph, of course, is another important tracker. All of these enrich the data we have on each user so that we can give better advice.

And actually, one of the things we’re doing with it, which I haven’t mentioned publicly yet, is looking at ways to use it to establish the BMR, or basal metabolic rate, to get even more accurate calorie budgets, because the number of calories your body needs really does vary with your body composition. So we’re looking at going even deeper: not just telling you these stats, but changing the recommendations you get based on them.
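To make Cook’s point concrete: several published equations estimate BMR from lean body mass rather than total weight. One of them is the Katch-McArdle formula, BMR ≈ 370 + 21.6 × lean body mass (kg), in kcal/day. Noom has not said which method it would use, so treat the sketch below as an assumption-laden illustration; the helper names and the two example bodies are hypothetical. It shows how two people of identical weight can get different BMR estimates once body composition is known.

```python
def lean_body_mass_kg(weight_kg: float, body_fat_fraction: float) -> float:
    """Lean mass = total weight minus fat mass (two-compartment assumption)."""
    if not 0.0 <= body_fat_fraction < 1.0:
        raise ValueError("body_fat_fraction must be in [0, 1)")
    return weight_kg * (1.0 - body_fat_fraction)


def katch_mcardle_bmr(lean_mass_kg: float) -> float:
    """Estimated basal metabolic rate in kcal/day (Katch-McArdle equation)."""
    return 370.0 + 21.6 * lean_mass_kg


# Two hypothetical people who both weigh 80 kg but differ in body fat.
for fat in (0.20, 0.35):
    lbm = lean_body_mass_kg(80.0, fat)
    bmr = katch_mcardle_bmr(lbm)
    print(f"body fat {fat:.0%}: lean mass {lbm:.1f} kg, BMR ~{bmr:.0f} kcal/day")
```

A scale-only approach would treat these two people the same; a composition-aware estimate gives the leaner person a noticeably higher baseline, which is the kind of adjustment to calorie budgets Cook describes.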

Do you charge extra for this service?

Cook: There’s no extra charge. It’s included as part of the new service.

Steve, how does this thing work? It sounds magical.

Prism Labs CEO Steve Raymond: Well, it’s magical. It wouldn’t have been possible five years ago without advances in machine learning and AI techniques on the computing side. In the past, computer vision was largely about angles, geometry and measuring things, and there was an upper limit to how accurate and precise you could get that way.

So we started with (this question): What’s the optimal user experience? That means one person can do it alone, it takes less than 10 seconds and there’s minimal interaction with the camera. You level your phone, you hit start, you spin around, and you’re done. We started from there, and then we said, ‘Well, what do we need to build – from a software standpoint – to make that as precise and accurate as any other body composition methodology?’ We had to solve some really hard problems to get there, and we did it with neural networks. There are more than 10 neural networks in the pipeline that we use to reach our current level of precision.

Why is video better, technically speaking, than images?

Raymond: Well, the thing about images is that the user has to interact every time. So if you want to get 20, 30, 40 or 50 images, it would take you a lot of time. It might be frustrating. You’d have user error, etc. With a video, we’re getting 150 photos as part of the payload, and then we’re using AI (on) a much richer data set. The challenge is that we’re actually measuring a moving body. When you move, you deform; you’re not always consistent. That’s one of the big challenges, and why no one’s ever been able to do it before. With machine learning and AI, we’ve crossed the Rubicon, and we’ve validated (our system in) the laboratory: it’s 40% more precise than a two-photo system.

Cook: I’ve done a scan myself a bunch of times. Each time, it had my waist within one inch, so it’s quite accurate. And each time it knew my right bicep was larger than my left, by only 0.3 inches. That makes sense, since I’m right-handed. The various folks inside Noom who use it have been kind of blown away by the tech.

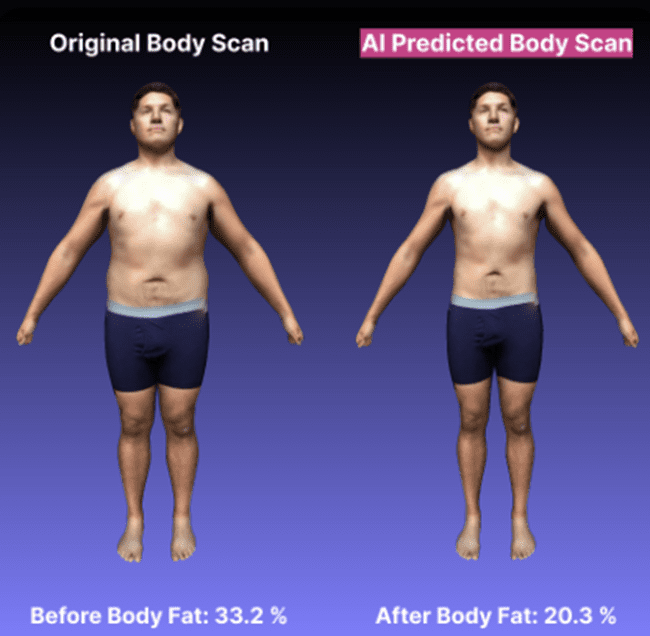

Raymond: It’s not just about precision. We’re in the business of helping Noom motivate people, and we’re giving people a very rich visual palette to look at as well. When you look at your avatar, it doesn’t look like a blob that’s roughly your size. It looks like you, because we’re doing a reconstruction. It’s very recognizable as you.

One of the features in the product is called ‘Future Me.’ We’re able to predict what you’re going to look like when you reach your goals. This is the first time that’s ever been possible, and we’re really excited to see how that helps people be motivated to stay on their journey.

What does having more accurate measurements do for your health?

Cook: If you don’t know your size and body composition, including your lean mass, it becomes very difficult to improve. If you look at various studies on longevity, there’s a very real correlation between body fat composition and both health span and lifespan, especially with respect to visceral fat around the midsection. If people can’t visualize or measure that, it’s just difficult to address.

Raymond: There’s something that happens to almost everybody at the start: You’re out of shape and you decide to get in shape, so you start to change a lot of things. You could be working out more; you could be eating less. You could be forgoing a piece of chocolate cake, whatever it is you’re doing (to lose weight). And the number on the scale may not actually go down, because you’re putting on muscle.

(With the body scan), there’s an intervention that can happen: say you didn’t lose the 10 pounds you wanted to, but your lean mass stayed the same. That means what you did lose was body fat, and that can be really empowering as well. When you talk to people who are really into metabolic health, they’re always frustrated that they’re stuck with BMI as a measurement, because everybody knows you can be healthy at a high BMI and unhealthy at a low BMI, and this sort of cracks that.
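Raymond’s two scenarios can be made concrete with simple arithmetic. The sketch below assumes a two-compartment model in which body weight splits into fat mass and lean mass, which is a simplification of what a real body scan reports; the `Scan` class and all numbers are hypothetical, not Prism’s or Noom’s data model.

```python
from dataclasses import dataclass


@dataclass
class Scan:
    """Hypothetical scan result: total weight and lean mass, in pounds."""
    weight_lb: float
    lean_mass_lb: float

    @property
    def fat_mass_lb(self) -> float:
        # Two-compartment assumption: everything that isn't lean mass is fat.
        return self.weight_lb - self.lean_mass_lb


def composition_change(before: Scan, after: Scan) -> dict[str, float]:
    """Split the change in scale weight into fat and lean components."""
    return {
        "weight": after.weight_lb - before.weight_lb,
        "fat": after.fat_mass_lb - before.fat_mass_lb,
        "lean": after.lean_mass_lb - before.lean_mass_lb,
    }


start = Scan(weight_lb=200.0, lean_mass_lb=130.0)

# Scale drops 5 lb instead of the hoped-for 10, but lean mass holds steady,
# so the entire loss came from fat.
print(composition_change(start, Scan(weight_lb=195.0, lean_mass_lb=130.0)))

# Scale doesn't move at all, yet 3 lb of fat was swapped for 3 lb of lean mass.
print(composition_change(start, Scan(weight_lb=200.0, lean_mass_lb=133.0)))
```

The second case is the one a scale alone cannot reveal: the weight delta is zero while the fat and lean deltas cancel each other out.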

How does the body scan fit into the craze around Ozempic and similar drugs?

Cook: Folks who are on GLP-1 drugs can lose as much as 40% of their weight … in muscle, and that’s actually a pretty extraordinary number. You might see more like 16% to 20% on a non-medicated weight loss journey. … The very fast weight loss that folks on GLP-1 drugs can experience can be damaging, because if you lose that much muscle mass and later come off the GLP-1, you risk weight regain. (Most folks – two out of three – stop using GLP-1 drugs by month 12.)

You will in all likelihood regain that lost weight unless your habits have changed, and a year or two later you could end up with a higher fat composition than when you started. That condition can actually become sarcopenic obesity, which combines low muscle mass with very high fat composition, is associated with higher mortality and leads to other conditions like frailty. So it’s critical for someone on GLP-1 drugs to mind their muscle mass by engaging in resistance training and getting enough protein, because they’re not going to want to eat as much.

Tell me about the data set you used to train this body scan system.

Raymond: Our technology team has been together a long time, and we came from the hardware business. Our previous hardware products allowed us to see a lot of people go through weight loss journeys and muscle-building journeys, giving us data sets of hundreds of thousands of people. We’ve also spent a lot of time on validation. We work with Texas Tech University on independent validation, and they bring in a lot of people, targeting different weights and ages, to get a very diverse set of bodies that we can calibrate against other established measures of body fat.

So we have big data sets for training. We also make quite a bit of use of synthetic data generation, because some of what we do involves separating the body from the foreground, the background and things like that, and we can use synthetic data for some of those tasks. But it’s really all about having a team that makes sure the data is anonymized, labeled and categorized correctly, so that when we find a new business problem to solve, we have data sets that are ready to go and we know how to use them.

Is the body scan just available in the United States?

Cook: We’re in the middle of the rollout right now. … It will start out in the U.S. and the U.K., where we have our biggest audiences.

What’s next for both your companies?

Cook: This is very much part of the other innovations we’ve been rolling out, like the AI food logger, which makes it very easy to track your food. We’ve rolled out an AI-enabled chatbot we call Welli that gives you actionable, useful insights in the moment. We’re doing a lot with AI-assisted coaching right now, helping our coaches surface the right data to … present it to a coach at the right moment in order to potentially say something that might affect (a user’s) motivation.

Looking forward three to five years, I think what this is all about is transforming the health care system from one of sick care, where we wait for people to get sick and then treat them, to one that creates personalized programs for preventive care. We want to stop chronic disease before it starts. … I can imagine doing that in a number of different ways, consistent with how we’re thinking about it, by bringing technology to bear, including interventions like biologics.

You can imagine molecular interventions, but certainly peptides, with respect to GLP-1s. It’s going to be a very fast-evolving space over the next three to five years. You’re probably going to see generic semaglutide, which would bring its cost down dramatically, but you’re also going to see entry from half a dozen other drug manufacturers with drugs in phase 1 or phase 2 trials. … It’s going to be a very dynamic space over the next five years.

Raymond: There’s a lot of talk about AI (in health care), but it’s still all rather nebulous. What you’re seeing is practitioners like Prism Labs starting to build foundation models in very narrow, application-focused areas. … There are going to be other foundation models coming up in continuous glucose monitoring, in blood and blood panels, and lots of people are going to be building similar little knowledge bases centered around all of those. It’ll start to get really interesting when all of these different models are talking to one another and to these health agents.

When a person comes walking in the door, … (you) can, in 10 seconds, know what their lean mass is. It’s going to tell me a lot about what their risk levels are. And then I can say, ‘Have we tested their triglycerides? Do we know that number?’ … We’ll train a lot of really interesting foundation models over the next five years, and then they’ll start talking to one another.

For the body scan feature on the Noom app, is the data stored at the edge or in the cloud?

Raymond: It’s a combination. We spent a lot of time working with the engineering, product and legal teams at Noom to make sure their privacy policy and opt-in consent are clear for every user, so users know exactly what they’re agreeing to and what kind of data we’re getting. We don’t receive any personally identifiable information about users. At this point in time, we don’t know whether they’re a trial user, what they’re paying, what their zip code is or things like that.