TLDR

- It is not universally true that generative AI is “expensive” to use, according to Andrew Ng, co-founder of Google Brain and Coursera, and founder of DeepLearning.AI.

- After the foundation models – like OpenAI’s GPT series that underpin ChatGPT – are pre-trained, which can cost billions of dollars, developers can build atop them for much less.

- Token prices have plunged as well and will continue to decline.

There is a pervasive “misconception” that generative AI is universally “expensive,” according to Andrew Ng, co-founder of Google Brain and Coursera, and founder of DeepLearning.AI. While it is costly to train cutting-edge foundation models, such as OpenAI’s GPT series, and some companies spend billions of dollars to do so, building AI applications on top of them need not be costly.

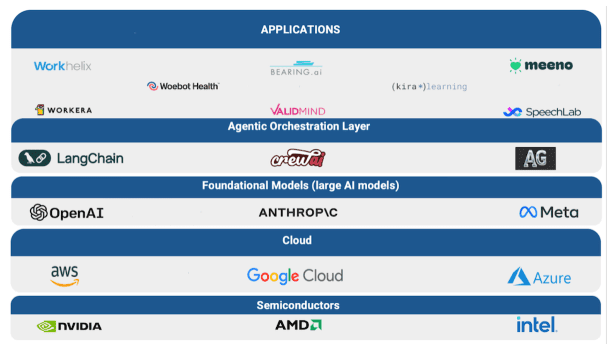

To understand the economics, one must first look at the AI stack. It consists of semiconductors at the bottom layer, with chipmakers such as Nvidia, AMD, Intel and others playing in the space. Above the chipmakers are the hyperscalers or cloud providers such as AWS, Azure and Google Cloud.

Above the cloud providers are foundation model developers: OpenAI, Anthropic, Meta and others. The orchestration layer, which manages interactions between AI models and other services, is next. This is the layer that is increasingly incorporating agentic AI – AI bots that tap LLMs and other AI bots to do tasks for the user. At the top are AI applications aimed at the end user.

The foundation model layer is the one that typically grabs headlines for being expensive, and it is costly, Ng wrote in his latest newsletter to subscribers. But building AI applications on top of these models is not, since the expensive work of pre-training them has already been done.

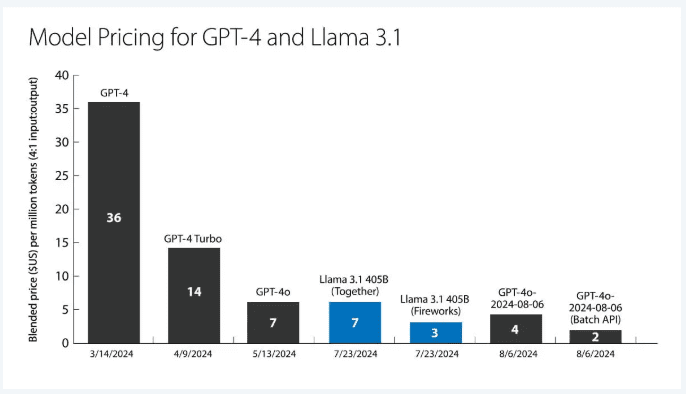

Moreover, the price of large language model tokens has been falling “dramatically,” Ng said. For example, OpenAI’s GPT-4o token prices dropped by 79% over 17 months ended Aug. 28, 2024, assuming a blend of 80% input and 20% output tokens. (AI applications tap LLMs to generate results for the user’s query. Both the queries posed to the LLM, and its output to the user, are measured in tokens.)
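The "blend" in that figure is a weighted average of input and output token prices. A minimal sketch of the calculation follows, assuming per-million-token prices; the function name and the example prices are illustrative assumptions, not figures from Ng's newsletter.

```python
def blended_price(input_price: float, output_price: float,
                  input_share: float = 0.8) -> float:
    """Weighted-average price per million tokens.

    input_share is the fraction of tokens that are input (prompt) tokens;
    the remainder are treated as output tokens. The 0.8 default mirrors
    the 80% input / 20% output blend described above.
    """
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    return input_share * input_price + (1.0 - input_share) * output_price


# Hypothetical prices in dollars per million tokens, chosen only to
# illustrate the formula; they are assumptions, not sourced figures.
example = blended_price(input_price=2.50, output_price=10.00)
print(f"Blended price: ${example:.2f} per million tokens")
```

Because output tokens usually cost more than input tokens, the assumed mix matters: an application that generates long responses would see a higher blended price than one that mostly reads long prompts.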

Why are prices dropping? The entry of free-to-use, open-source and open-weight models like Meta’s Llama is one factor, Ng said. That means developers of AI applications don’t have to worry about training their own foundation models; they can use the open-source ones available. Further, hardware innovations that speed up processing and improve efficiency will also drive down costs.

To illustrate how little building can cost, Ng said he spent the Thanksgiving holiday weekend prototyping different generative AI applications, and his bill for OpenAI API calls came to only about $3. On his personal AWS account, his recent monthly bill for prototyping and experimentation was $35.30.

However, agentic AI will be more expensive. Because AI bots in this structure interact with other models and APIs to complete tasks, they consume more tokens before the user sees a result. But Ng believes that as token prices continue to drop, agentic AI structures will become economical.

Even now, some agentic AI structures are already quite reasonably priced. Ng posits that if you build an application to help a human worker, and it uses 100 tokens per second continuously, at GPT-4o’s price of $4 per million tokens, the cost comes to only $1.44 per hour, which is far lower than minimum wage.
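The arithmetic behind that hourly figure can be checked with a short sketch. The function and constant names are hypothetical; the inputs (100 tokens per second, $4 per million tokens) are the ones quoted above.

```python
SECONDS_PER_HOUR = 3600


def hourly_cost(tokens_per_second: float,
                price_per_million_tokens: float) -> float:
    """Dollar cost of one hour of continuous token usage."""
    tokens_per_hour = tokens_per_second * SECONDS_PER_HOUR
    return tokens_per_hour / 1_000_000 * price_per_million_tokens


# 100 tokens/s * 3,600 s = 360,000 tokens per hour; at $4 per million
# tokens that is 0.36 * $4.
cost = hourly_cost(tokens_per_second=100, price_per_million_tokens=4.0)
print(f"${cost:.2f} per hour")  # prints "$1.44 per hour"
```

The same function shows how sensitive the result is to usage: an agentic workflow that consumes ten times as many tokens per second would cost ten times as much per hour at the same price.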