TLDR

- Meta introduced its first multimodal models: Llama 3.2 with 11 billion and 90 billion parameters. It also unveiled small models with 1 billion and 3 billion parameters, meant for edge computing.

- Meta unveiled Orion, AR glasses that look like regular glasses. It is a product prototype for now, but Meta plans to improve upon it and eventually sell it to the public.

- Meta also upgraded the AI capabilities of its Ray-Ban Meta smart glasses and unveiled a cheaper headset, the Quest 3S.

Mark Zuckerberg has been busy.

At Meta Connect 2024, the CEO of Meta (formerly Facebook) unveiled the company’s first open-source multimodal models – as well as brand new augmented reality glasses.

First, the models. Called Llama 3.2, this set of four AI models comes in sizes of 1 billion, 3 billion, 11 billion and 90 billion parameters, the learned weights a model uses to generate output. Each has a context window of 128K tokens, or enough space for a prompt of roughly 96,000 words.
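The 96,000-word figure is a rough conversion rather than a hard limit. A minimal sketch of the arithmetic, assuming "128K" means 128,000 tokens and using the common rule of thumb of about 0.75 English words per token (an assumption, not a number Meta published; the function names are illustrative):

```python
# Assumption: ~0.75 English words per token, a common rule of thumb.
# Real ratios vary with the tokenizer, the language and the text itself.
WORDS_PER_TOKEN = 0.75


def estimate_words(context_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a context window of this size."""
    return round(context_tokens * words_per_token)


def fits_in_context(text: str, context_tokens: int = 128_000,
                    words_per_token: float = WORDS_PER_TOKEN) -> bool:
    """Rough check: does a whitespace-split word count stay within the estimate?"""
    word_count = len(text.split())
    return word_count <= estimate_words(context_tokens, words_per_token)


if __name__ == "__main__":
    print(estimate_words(128_000))  # 96000
```

An exact count would require running the text through the model's own tokenizer, so the estimate is best used only for quick sizing.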

The 11 billion and 90 billion models are multimodal, adding vision understanding to the text-only models (Llama 3.1) they are replacing. These larger models can understand images, such as charts, and explain what they see in natural language. For example, given a sales chart, a model can answer a question about which month of the prior year was a small business's best for sales.

The 1 billion and 3 billion models are text-only and small enough to run on mobile devices and on edge devices, which process data locally rather than in the cloud.

As open source models, the Llama models are generally freely available to use and fine-tune (customize), especially for researchers, while commercial users face some restrictions. Llama models can go toe-to-toe with rival proprietary models, including OpenAI’s powerful GPT-4o, giving researchers and developers free access to frontier-level models.

Meta also unveiled its first Llama Stack distributions to simplify how developers work with its models across single-node, on-premises, cloud and on-device environments.

The models can be downloaded via llama.com or Hugging Face, as well as through Meta’s cloud and tech partners AMD, AWS, Databricks, Dell, Google Cloud, Groq, IBM, Intel, Microsoft Azure, Nvidia, Oracle Cloud, Snowflake and others.

AR glasses that look like glasses

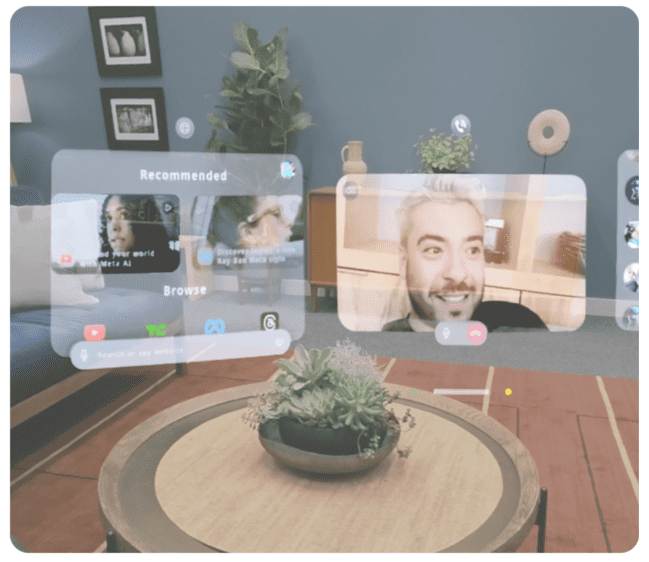

Zuckerberg also introduced Orion, Meta's first AR glasses in the form of regular glasses. (Meta already offers AR in its Quest headsets.) Orion lets users see virtual objects in 2D and 3D layered onto real-world settings, so they can do things like open a browser to work, hold a video chat, play games and watch movies. The glasses weigh 100 grams, or about 1/5 of a pound.

The glasses also have Meta AI, the company's AI assistant, which can understand the world around you. For example, look at a group of ingredients and ask Meta AI to identify them or suggest recipes.

Meta said Orion is a product prototype that it intends to one day sell to consumers. The company will continue to improve it before it is ready for public use.

Zuckerberg also announced that Meta's smart glasses, made in collaboration with EssilorLuxottica, the maker of Ray-Ban, will get upgraded AI capabilities including real-time speech translation. The embedded Meta AI will also help you remember things, like where you parked.

Meta unveiled Quest 3S, its cheapest AR/VR headset to date, priced at $299. The product has abilities comparable to those of Quest 3 and is meant to entice users of Quest and Quest 2 to upgrade. Users can watch a concert in Horizon Worlds' Music Valley in the metaverse, watch movies on Prime Video or Netflix in HD, and even work out in a virtual environment.

Meta said it is dropping the price of Quest 3 (512GB version) to $499.99 from $649.99.