Key takeaways:

- Meta unveiled Llama 3.1 405B, its largest language model and the most powerful open-source foundation model on the market.

- CEO Mark Zuckerberg said he chose the open source path because Meta was late to the LLM party and this was a way to set the industry standard and dominate. He also said he believes in open source philosophically.

- Nvidia CEO Jensen Huang said client excitement over Llama 3.1 405B is “off the charts.”

Meta, the parent of Facebook, recently released Llama 3.1 405B, its largest and most capable foundation model yet, which performs on par with the best proprietary AI models such as OpenAI’s GPT-4o. It is also the most powerful foundation model whose weights are openly available.

At 405 billion parameters, Llama 3.1 405B substantially improves upon Meta’s earlier generation of models, Llama 2, which tops out at 70 billion. That matters because, in general, the more parameters a language model has, the greater its capabilities. Llama 3.1 405B has a context window of 128K tokens (roughly 96,000 words for the prompt) and supports eight languages. Meta also released 8 billion- and 70 billion-parameter versions.
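For readers wondering where the 96,000-word figure comes from, here is a minimal sketch of the arithmetic. It assumes the common rule of thumb of about 0.75 English words per token, which is an approximation and not a number Meta published, and it reads "128K" as 128,000 tokens. The function name `approx_words` is purely illustrative.

```python
# Assumption: ~0.75 English words per token (a rough rule of thumb, not a Meta figure).
WORDS_PER_TOKEN = 0.75


def approx_words(context_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a context window of the given size."""
    return round(context_tokens * words_per_token)


context_window = 128_000  # "128K" read as 128,000 tokens (assumption)
print(approx_words(context_window))  # 96000
```

The real ratio varies with the tokenizer, the language and the kind of text, so the result is best read as a ballpark figure.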

“Last year, Llama 2 was only comparable to an older generation of models behind the frontier,” wrote Meta founder and CEO Mark Zuckerberg, in a blog post. “This year, Llama 3 is competitive with the most advanced models and leading in some areas. Starting next year, we expect future Llama models to become the most advanced in the industry.”

Zuckerberg compares Meta’s decision to open-source AI models to the early days of Linux, a free operating system that eventually became the industry standard for cloud computing and operating systems for mobile devices. Linux gained popularity because it let developers modify its code and was affordable; later, it became more advanced and secure as the community improved upon it.

The World Wide Web, of course, is free and open with its source code publicly available. “I believe AI will develop in a similar way,” Zuckerberg said.

Dislike of Apple’s closed ecosystem

There is another reason why Meta chose the open source route. At this week’s SIGGRAPH 2024 conference, in a fireside chat with Nvidia CEO Jensen Huang, Zuckerberg said that Meta got started in large language models later than other tech giants. So Meta decided to open-source its models in hopes of becoming the industry standard and dominating in that sense.

In his blog post, Zuckerberg noted that Apple operates in a closed ecosystem while Microsoft, though still proprietary, is “much more open” and thus Windows became the leading operating system in PCs.

He also said Meta’s experience with Apple – Facebook, Instagram and WhatsApp are part of the App Store – limits how his company can build services given the “way they tax developers, the arbitrary rules they apply, and all the product innovations they block from shipping.” In 2021, Apple made changes in iOS 14.5 that required apps to ask users to opt in if they want to be tracked. Meta disclosed in an earnings report that this rule change cost the company $10 billion in ad revenue in 2022.

“On a philosophical level, this is a major reason why I believe so strongly in building open ecosystems in AI and AR/VR for the next generation of computing,” Zuckerberg wrote.

Llama 3.1 405B may be downloaded for free on Hugging Face or llama.meta.com; organizations with more than 700 million monthly active users must get a license from Meta. What’s new: Developers can now use the outputs from Llama models to improve other models. Both the pre-trained and post-trained models and their weights are available. The training data has a cutoff date of the end of 2023.

Llama models can also be accessed through the platforms of over 25 Meta partners, including AWS, Nvidia, Databricks, Groq, Azure, Google Cloud and Snowflake. Users can try Llama 3.1 405B on WhatsApp and at Meta.ai.

Nvidia’s Huang said client excitement about Llama 3.1 405B has been “off the charts.” He particularly appreciated the updated safety and security components Meta released alongside Llama 3.1: Llama Guard 3 and Prompt Guard, which are part of a full reference system. Meta wants to develop what it calls the Llama Stack – a set of standardized interfaces for fine-tuning, synthetic data generation and agentic applications – to aid interoperability across the ecosystem. It has issued a request for comment on the proposal.

More new capabilities are coming: Image, video and audio capabilities are still in development and will be introduced later to Llama 3.1.

How Llama 3.1 405B stacks up

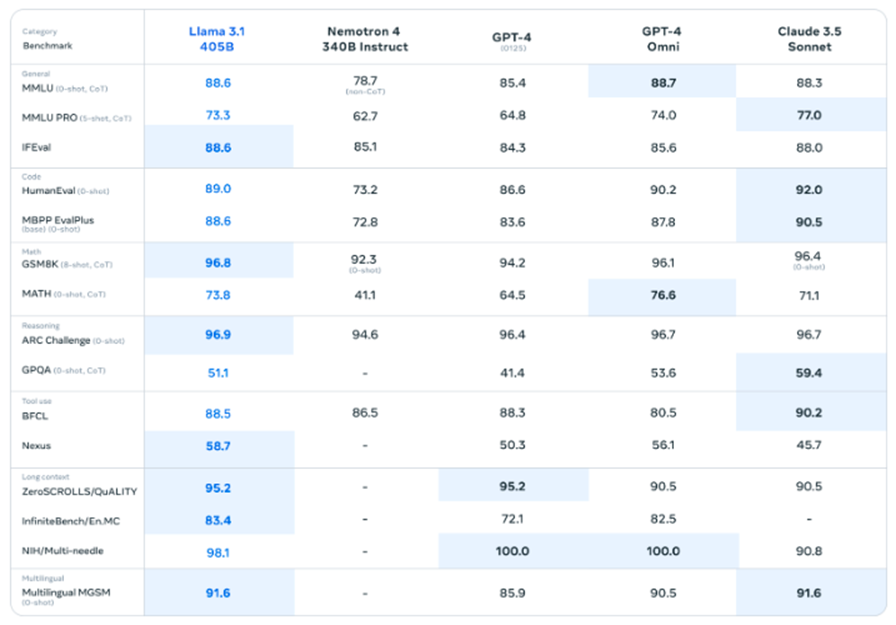

Meta published benchmark results for Llama 3.1 405B across many capabilities, including general knowledge, math, tool use, multilingual translation and steerability. Researchers then compared the results to OpenAI’s GPT-4 and GPT-4o, and Anthropic’s Claude 3.5 Sonnet.

“Until today, open source large language models have mostly trailed behind their closed counterparts when it comes to capabilities and performance,” according to a Meta blog post. “Now, we’re ushering in a new era with open source leading the way.”

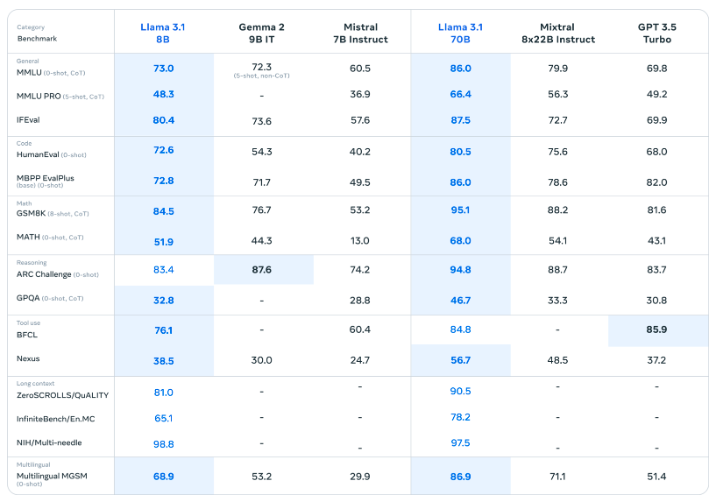

The 8 billion- and 70 billion-parameter versions of Llama 3.1, which also have 128K context windows, performed well against popular models of similar size.